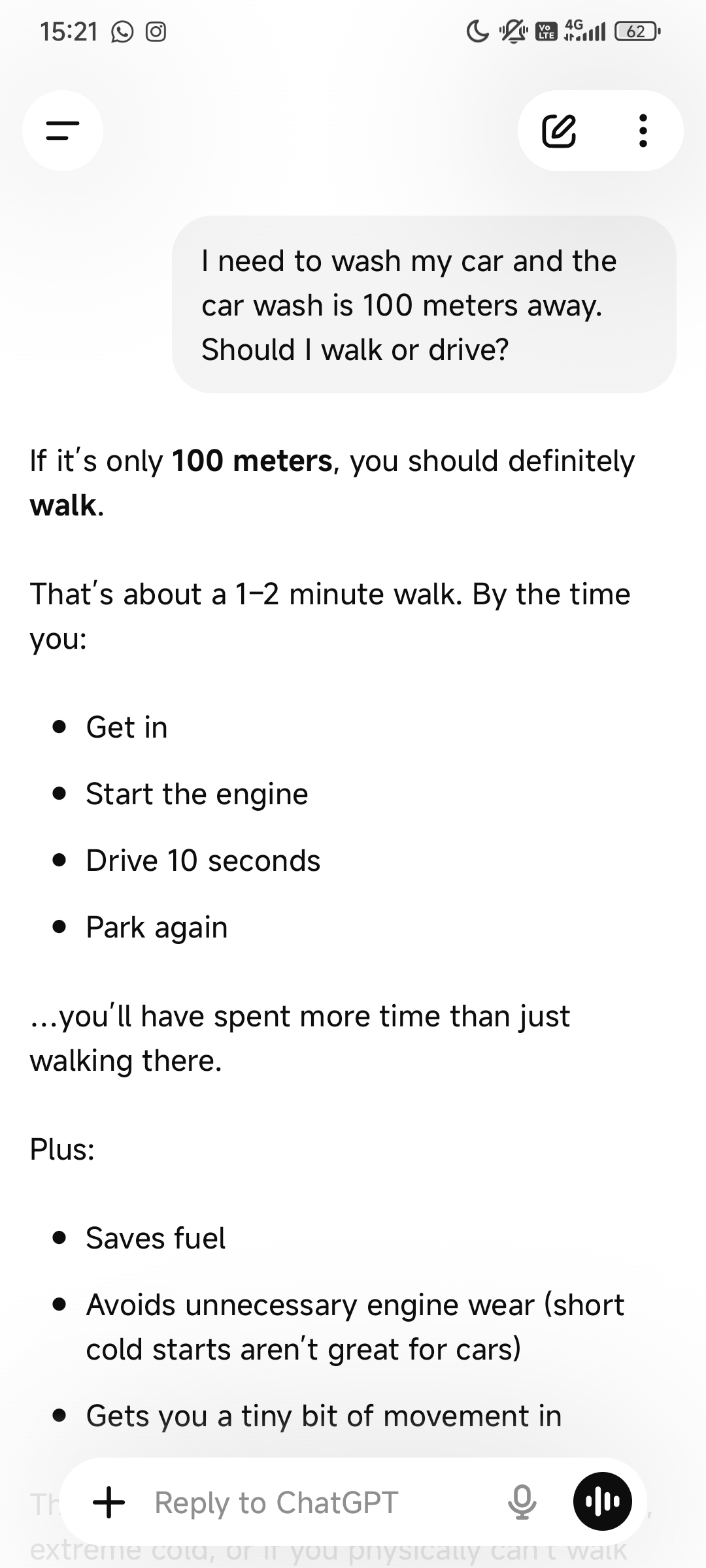

I just tried it in ChatGPT and it said “Walk”. But at the bottom it added: “The only reason to drive would be: You need to move the car there directly for washing and can’t push.”

“and can’t push” 😭

Indeed

Well done, you managed to find a picture of someone else’s comment added to a third person’s joke, and you took it seriously. What a great bastion of critical thinking you are!

https://mastodon.world/@knowmadd/116072773118828295

For those who want more

Just a heads up for anyone who may use this in an argument. I just tested on several models and the generated response accounted for the logical fallacy. Unfortunately it isn’t real.

(Funny nonetheless)

Tested on GPT-5 mini and it’s real tho?

Edit: Gemini gives different results

Man, I really hate how much they waffle. The only valid response is “You have to drive, because you need your car at the car wash in order to wash it”.

I don’t need an explanation of what kind of problem it is, nor a breakdown of the options. I don’t need a bullet-point list of arguments. I don’t need pros and cons. And I definitely don’t need a verdict.

Gemini’s responses were surprisingly humorous

I used paid models, which are the only ones the LLM bros will care about. Even they kinda know not to glaze the free models. So not surprising.

(I have to have the paid models for work; my lead developer is an LLM nut.)

With these, it’s basically impossible to tell whether the example is totally fabricated; true, but only happens a small percentage of the time; true and happens most of the time, but you got lucky; or true and reliable, but the company has since patched this specific case because it blew up online.